Autonomous object manipulation and transportation using a mobile service robot equipped with an RGB-D and LiDAR sensor

The research paper can be seen here:

https://doi.org/10.1117/12.2594025

Abstract

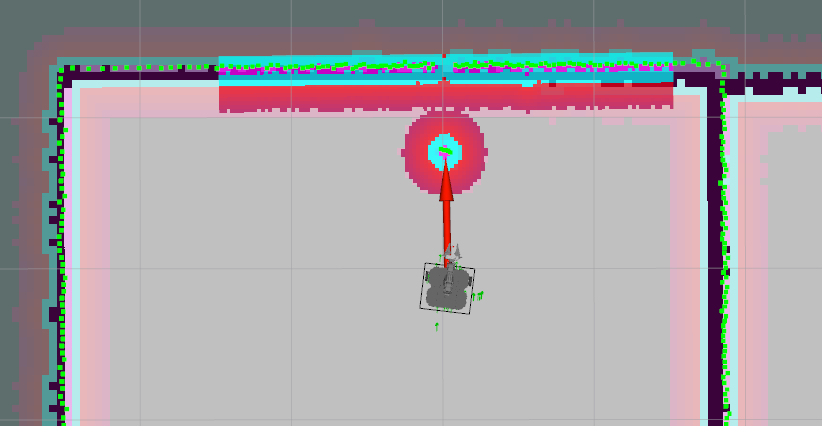

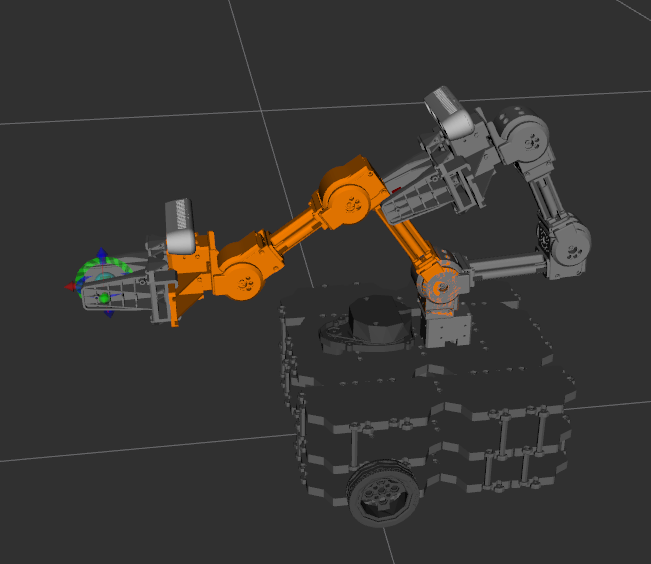

This paper presents the implementation of a mobile service robot with a manipulator and a navigation stack to interact and move through an environment providing a delivery-type service. The implementation uses a LiDAR sensor and an RGB-D camera to navigate and detect objects that can be picked up by the manipulator and delivered to a target location. The robot navigation stack includes mapping, localization, obstacle avoidance, and trajectory planning for robust autonomous navigation across an office environment. The manipulator uses the RGB-D camera to recognize specific objects that can be picked up. Experimental results are presented to validate the implementation and robustness.

My contribution

Research

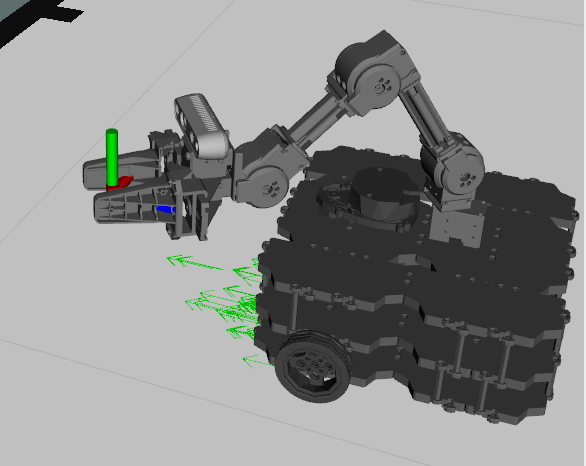

Studied the ROS libraries and kinematics fundamentals needed to implement robust robot arm movement while keeping a global and local reference of the mobile robot. Used the SLAM work researched last year for all navigation, obstacle avoidance, and path planning. Surveyed the robot arms on the market to find the one that best fits a TurtleBot and is light enough to be efficient for mobile applications. Also created a Gazebo model of the robot arm, including all of its physical properties, so that the robot and arm can be simulated together.

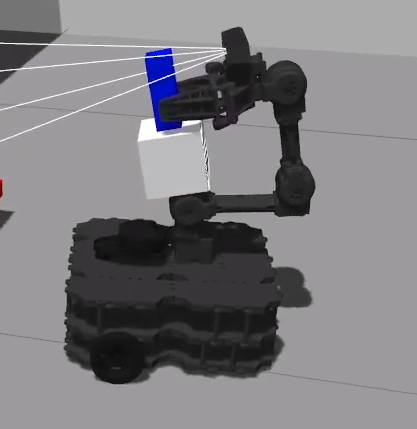

Arm Trajectory planning

Mounted the robotic arm onto the robot chassis in the Gazebo simulation and integrated the MoveIt library to provide a complete inverse kinematics solver and trajectory planning for the robot.
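
To illustrate what an inverse kinematics solver computes, here is a minimal sketch for a hypothetical two-link planar arm. It is not the solver MoveIt uses and not the arm from this project: the function names, the link lengths, and the planar simplification are all assumptions made for the example. Given a target point, it returns one of the two mirror-image joint solutions, or `None` when the target is out of reach.

```python
import math


def two_link_ik(x, y, l1, l2):
    """Closed-form IK for a hypothetical two-link planar arm.

    Returns (shoulder, elbow) joint angles in radians for one of the two
    mirror-image solutions, or None if the target (x, y) is unreachable.
    """
    d2 = x * x + y * y
    # Law of cosines gives the elbow angle from the target distance.
    cos_elbow = (d2 - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_elbow) > 1.0:
        return None  # target is outside the reachable annulus
    elbow = math.acos(cos_elbow)
    # Shoulder angle: direction to target minus the offset caused by link 2.
    shoulder = math.atan2(y, x) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return shoulder, elbow


def two_link_fk(shoulder, elbow, l1, l2):
    """Forward kinematics, used here only to check the IK result."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y


# Assumed link lengths in metres, for illustration only.
L1, L2 = 0.15, 0.15
solution = two_link_ik(0.20, 0.10, L1, L2)
if solution is not None:
    print("joint angles:", solution)
    print("reached point:", two_link_fk(*solution, L1, L2))
```

Feeding the joint angles back through the forward kinematics should reproduce the target point, which is a quick sanity check for any IK routine. A real manipulator has more joints and moves in 3D, which is why a general-purpose solver and planner such as MoveIt is used in practice.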

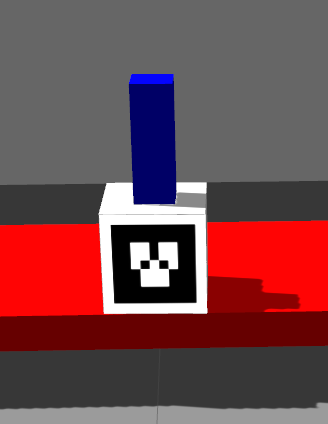

Grasping, computer vision and object localization

Created the computer vision algorithm that pinpoints an object carrying an ArUco label and localizes it with respect to the robot, so that a grasping trajectory can be planned and executed to grab the object. ROS is used to publish the relative location and TF of the object in relation to the grasping range of the arm. Once the location of the object has been published, the algorithm calculates the best grasping position, which is in the blue region of the handle.
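
The localization step amounts to re-expressing the detected marker position in the arm's frame and checking it against the grasping range. The sketch below shows that idea in plain Python rather than with ROS TF. The camera mounting offset, the reach limit, the marker coordinates, and all function names are hypothetical, and both frames are assumed to use the same x-forward, z-up axis convention.

```python
import math


def make_transform(yaw, tx, ty, tz):
    """4x4 homogeneous transform: rotation about z by yaw, plus a translation."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_point(T, p):
    """Apply homogeneous transform T to the 3D point p."""
    x, y, z = p
    return tuple(
        T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3] for i in range(3)
    )


def within_reach(p, max_reach):
    """True if point p (in the arm base frame) lies within max_reach metres."""
    return math.sqrt(sum(c * c for c in p)) <= max_reach


# Assumed mounting: camera 0.10 m ahead of and 0.20 m above the arm base,
# with no rotation between the two frames (illustrative values only).
T_base_camera = make_transform(0.0, 0.10, 0.0, 0.20)

# Hypothetical marker position reported by the detector, in the camera frame.
marker_in_camera = (0.25, 0.05, -0.15)

marker_in_base = transform_point(T_base_camera, marker_in_camera)
print("marker in arm base frame:", marker_in_base)
print("graspable:", within_reach(marker_in_base, 0.40))
```

In a ROS system the transform between frames would normally be looked up from the TF tree instead of being hard-coded, and the grasp point would be offset from the marker toward the handle.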