Research paper at SPIE 2020

The research paper can be seen here:

https://doi.org/10.1117/12.2568133

Abstract

This paper presents the implementation of mapping, localization and navigation algorithms for a mobile service robot in an unknown environment. The implementation uses a 3D LiDAR sensor to sense the environment and build an occupancy grid map that enables global localization and navigation through the environment. The robot estimates its current position with the Monte Carlo localization algorithm, using LiDAR and odometry data. The navigation stack uses inflation to determine whether the service robot can safely navigate through the environment and avoid obstacles. Experiments were carried out with both a physical and a simulated robot in an indoor environment, without prior knowledge of the obstacles present in it.
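To illustrate the Monte Carlo localization idea named in the abstract, here is a minimal one-dimensional particle filter sketch. It assumes a robot driving along a corridor toward a single wall at a known position. Odometry supplies the motion increment, and a simulated, noise-free range reading stands in for the LiDAR. The function name `mcl_step` and all noise parameters are hypothetical. It uses plain multinomial resampling, whereas a real localizer such as the ROS `amcl` package works on a 2D map and adds refinements this sketch omits.

```python
import math
import random

def likelihood(measured, expected, sigma):
    """Unnormalized Gaussian likelihood of a range reading."""
    return math.exp(-0.5 * ((measured - expected) / sigma) ** 2)

def mcl_step(particles, odom_delta, range_reading, wall_x, rng,
             motion_noise=0.05, sensor_noise=0.2):
    """One predict/update/resample cycle of a 1D particle filter (hypothetical helper)."""
    # Predict: move every particle by the odometry increment plus noise
    moved = [p + odom_delta + rng.gauss(0.0, motion_noise) for p in particles]
    # Update: weight each particle by how well it explains the range to the wall
    weights = [likelihood(range_reading, wall_x - p, sensor_noise) for p in moved]
    if sum(weights) == 0.0:
        weights = [1.0] * len(moved)  # every weight underflowed; keep particles uniform
    # Resample: draw a new particle set with probability proportional to weight
    return rng.choices(moved, weights=weights, k=len(moved))

rng = random.Random(0)
wall_x, true_x = 10.0, 2.0
particles = [rng.uniform(0.0, 10.0) for _ in range(500)]  # start pose unknown
for _ in range(5):
    true_x += 0.5                  # the robot drives 0.5 m forward
    reading = wall_x - true_x      # simulated noise-free LiDAR range to the wall
    particles = mcl_step(particles, 0.5, reading, wall_x, rng)
estimate = sum(particles) / len(particles)
print(f"true x = {true_x:.2f} m, estimate = {estimate:.2f} m")
```

The particles start spread uniformly over the corridor, which corresponds to global localization. They collapse around the true position after the first range reading.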

My contribution

ROS implementation of service robot

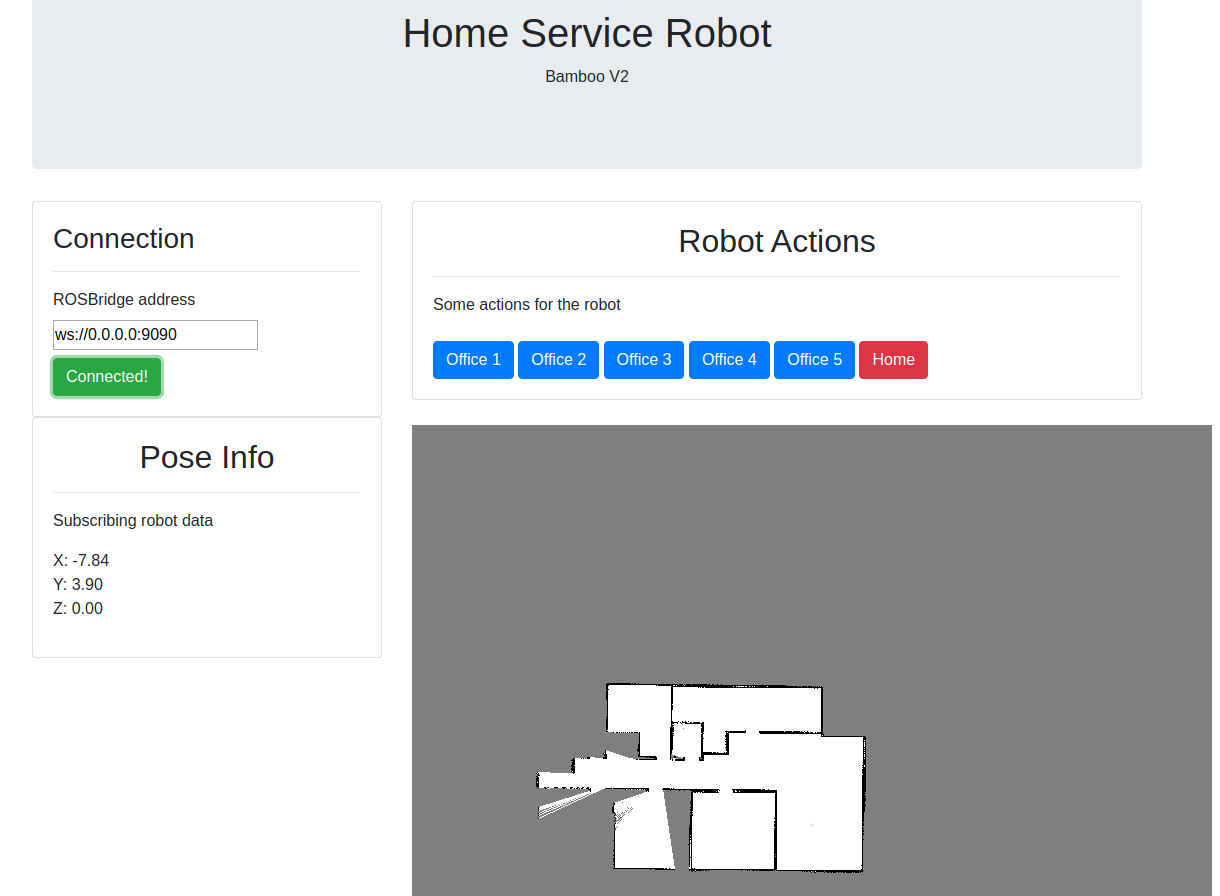

The service robot implementation includes a web user interface that lets the user influence the robot's behavior. In this case, the user can order the robot to go to a specific office. Note that the web server runs on the robot's local computer, but it could also be hosted on an external server or in the cloud, which would then send MQTT messages to the robot.
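The sketch below shows how an office request from the web interface might be turned into a message the robot can act on. It maps an office name to a goal pose in the map frame and serializes it as JSON, ready to be sent as an MQTT payload. The office names, coordinates, field layout and `goal_payload` helper are all hypothetical and not taken from the paper. The fields mirror a `geometry_msgs/PoseStamped` so that a bridge node on the robot could, for example, copy them into a goal for `move_base`. Actually publishing the string with an MQTT client library is left out.

```python
import json
import math

# Hypothetical office table: names and map-frame poses are placeholders,
# not values from the paper.
OFFICES = {
    "office_101": (3.2, 1.5, 0.0),          # x [m], y [m], yaw [rad]
    "office_102": (7.8, -2.0, math.pi / 2),
}

def goal_payload(office, frame_id="map"):
    """Serialize the map-frame goal pose for `office` as a JSON string."""
    if office not in OFFICES:
        raise KeyError(f"unknown office: {office}")
    x, y, yaw = OFFICES[office]
    return json.dumps({
        "frame_id": frame_id,
        "position": {"x": x, "y": y, "z": 0.0},
        # A heading in the plane is a rotation about z: q = (0, 0, sin(yaw/2), cos(yaw/2))
        "orientation": {"x": 0.0, "y": 0.0,
                        "z": math.sin(yaw / 2), "w": math.cos(yaw / 2)},
    })

payload = goal_payload("office_102")
print(payload)  # this string is what the web server would publish over MQTT
```

Sending a pose rather than an office name keeps the robot-side bridge generic. The trade-off is that the office table then lives with the web server.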

Integration of navigation stack with web user interface

The navigation stack lets the robot move through the environment using two maps, a global map and a local map. The global map accounts for the boundaries and obstacles that were previously mapped, while the local map uses live LiDAR data to avoid obstacles that may suddenly appear in the robot's path.
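Both maps rely on the inflation step mentioned in the abstract to keep the robot clear of obstacles. Below is a minimal sketch of inflating a binary occupancy grid into a cost grid. The values 254 (lethal) and 253 (inscribed) follow the `costmap_2d` convention. The exponential decay outside the inscribed radius is modeled on that inflation layer's documented cost formula. The grid, resolution, radii and scaling factor are illustrative assumptions, and `inflate` is a hypothetical helper that does a brute-force nearest-obstacle search rather than the outward propagation `costmap_2d` uses.

```python
import math

LETHAL = 254     # cell contains an obstacle
INSCRIBED = 253  # robot footprint centred here would touch an obstacle

def inflate(grid, resolution, inscribed_radius, inflation_radius, scaling=10.0):
    """Turn a binary grid (1 = obstacle, 0 = free) into an inflated cost grid."""
    rows, cols = len(grid), len(grid[0])
    obstacles = [(r, c) for r in range(rows) for c in range(cols) if grid[r][c] == 1]
    cost = [[0] * cols for _ in range(rows)]
    if not obstacles:
        return cost
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == 1:
                cost[r][c] = LETHAL
                continue
            # Distance in metres to the nearest obstacle cell
            d = resolution * min(math.hypot(r - orow, c - ocol) for orow, ocol in obstacles)
            if d <= inscribed_radius:
                cost[r][c] = INSCRIBED
            elif d <= inflation_radius:
                # Cost decays exponentially beyond the inscribed radius
                cost[r][c] = int((INSCRIBED - 1) * math.exp(-scaling * (d - inscribed_radius)))
    return cost

grid = [[0] * 5 for _ in range(5)]
grid[2][2] = 1  # a single obstacle in the middle of a 0.5 m x 0.5 m patch
costs = inflate(grid, resolution=0.1, inscribed_radius=0.1, inflation_radius=0.25)
for row in costs:
    print(row)
```

A planner would treat cells at or above the inscribed value as collisions. The decaying cost beyond that value makes paths prefer to stay away from walls without forbidding narrow passages.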

Integration of mapping with frontier exploration

Alongside the gmapping package in ROS, a frontier exploration library was also implemented, which allows the robot to explore an unknown environment and map it in its entirety. The map is also displayed in the web user interface so the user can see where the robot is exploring.
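Frontier exploration works by finding frontiers, the free cells that border unexplored space, and driving toward them until none remain. The sketch below detects frontier cells in an occupancy grid, groups them into 8-connected clusters, and returns each cluster's centroid as a candidate exploration goal. It assumes the `nav_msgs/OccupancyGrid` value convention that gmapping publishes: -1 for unknown, 0 for free and 100 for occupied. The helper names and the tiny example grid are hypothetical. The exploration library used in the project is not named here, so this does not reproduce its goal ranking or selection.

```python
from collections import deque

UNKNOWN, FREE = -1, 0  # nav_msgs/OccupancyGrid convention; occupied cells are 100

def frontier_cells(grid):
    """Free cells with at least one unknown 4-neighbour."""
    rows, cols = len(grid), len(grid[0])
    cells = set()
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    cells.add((r, c))
                    break
    return cells

def frontier_centroids(grid, min_size=1):
    """Group frontier cells into 8-connected clusters; return (row, col, size) per cluster."""
    remaining = frontier_cells(grid)
    result = []
    while remaining:
        seed = remaining.pop()
        queue, cluster = deque([seed]), [seed]
        while queue:  # breadth-first flood fill over neighbouring frontier cells
            r, c = queue.popleft()
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    n = (r + dr, c + dc)
                    if n in remaining:
                        remaining.remove(n)
                        queue.append(n)
                        cluster.append(n)
        if len(cluster) >= min_size:  # ignore clusters too small to be worth visiting
            mean_r = sum(r for r, _ in cluster) / len(cluster)
            mean_c = sum(c for _, c in cluster) / len(cluster)
            result.append((mean_r, mean_c, len(cluster)))
    return result

grid = [
    [0,   0, 0, -1, -1],
    [0, 100, 0, -1, -1],
    [0,   0, 0,  0, -1],
]
print(frontier_centroids(grid))  # → [(1.0, 2.3333333333333335, 3)]
```

Each centroid, converted from cell indices to map coordinates, could be sent to the navigation stack as the next goal. Exploration ends when no clusters remain.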